Source

OECD (2026), OECD Digital Education Outlook 2026: Exploring Effective Uses of Generative AI in Education, OECD Publishing, Paris, https://doi.org/10.1787/062a7394-en.

Summary

This flagship OECD report examines how generative artificial intelligence (GenAI) is reshaping education systems, with a strong emphasis on evidence-based uses that enhance learning, teaching, assessment, and system capacity. Drawing on international research, policy analysis, and design experiments, the report moves beyond hype to identify where GenAI adds genuine educational value and where it introduces risks. It highlights GenAI’s potential to support personalised learning, high-quality feedback, teacher productivity, and system-level efficiency, while cautioning against uses that displace cognitive effort or undermine deep learning.

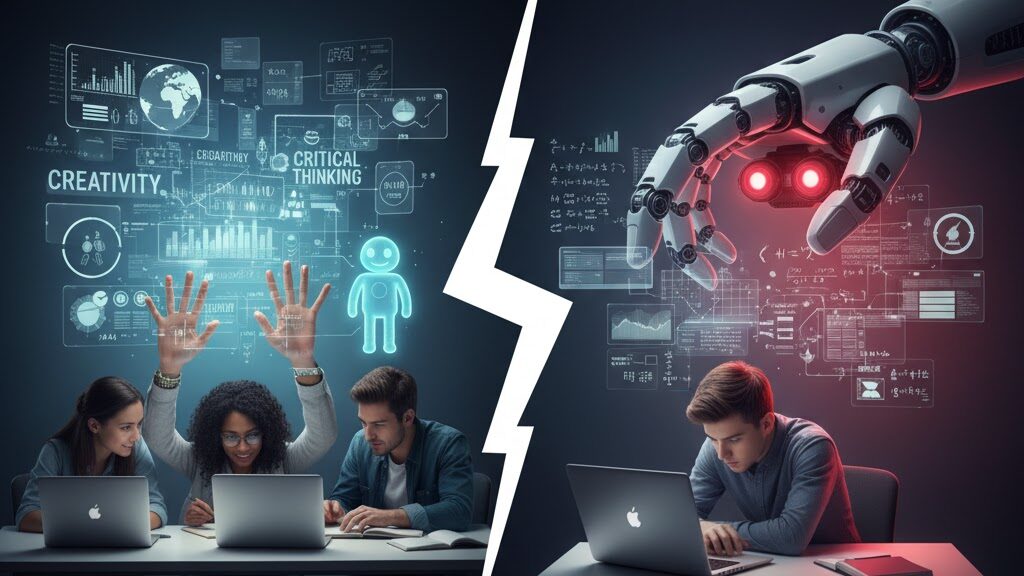

A central theme is the need for hybrid human–AI approaches that preserve teacher autonomy, learner agency, and professional judgement. The report shows that GenAI can be effective when embedded in pedagogically grounded designs, such as intelligent tutoring, formative feedback, and collaborative learning, but harmful when used as a shortcut to answers. It also reviews national policy responses, noting a global shift towards targeted guidance, AI literacy frameworks, and proportionate regulation aligned with ethical principles, transparency, and accountability. The report calls for coordinated strategies that integrate curriculum reform, assessment redesign, professional development, and governance to ensure GenAI strengthens, rather than substitutes for, human learning and expertise.

Key Points

- GenAI can enhance personalised learning and feedback at scale when pedagogically designed.

- Overreliance on GenAI risks reducing cognitive engagement and deep learning.

- Hybrid human–AI models are essential to preserve teacher and learner agency.

- GenAI should support formative assessment rather than replace professional judgement.

- AI literacy is a foundational skill for students, teachers, and leaders.

- Teacher autonomy and professional expertise must be protected in AI integration.

- Evidence-informed design is critical to avoid unintended learning harms.

- National policies increasingly favour guidance over blanket bans.

- Ethical principles, transparency, and accountability underpin responsible use.

- Cross-system collaboration strengthens sustainable AI adoption.

Conclusion

The OECD Digital Education Outlook 2026 positions generative AI as a powerful but conditional force in education. Its impact depends not on the technology itself, but on how thoughtfully it is designed, governed, and integrated into learning ecosystems. By prioritising human-centred, evidence-based, and ethically grounded approaches, education systems can harness GenAI to improve quality and equity while safeguarding the core purposes of education.

URL

https://www.oecd.org/en/publications/oecd-digital-education-outlook-2026_062a7394-en.html

Summary generated by ChatGPT 5.2