Source

Gulf News

Summary

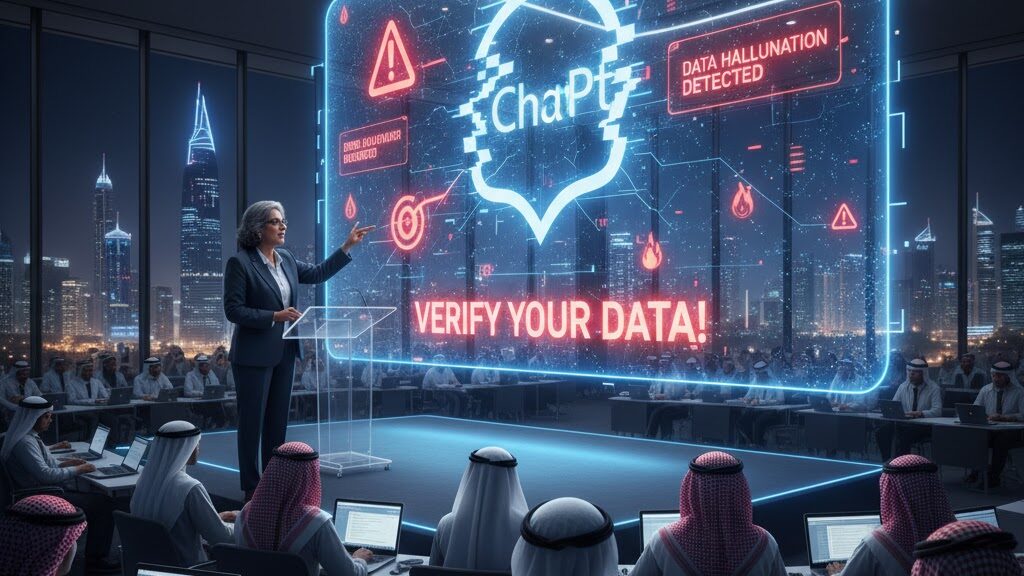

Dr Wafaa Al Johani, Dean of Batterjee Medical College in Dubai, cautioned students against over-reliance on generative AI tools like ChatGPT during the Gulf News Edufair Dubai 2025. Speaking on the panel “From White Coats to Smart Care: Adapting to a New Era in Medicine,” she emphasised that while AI is transforming medical education, it can also produce false or outdated information, a problem known as “AI hallucination.” Al Johani urged students to verify all AI-generated content, practise ethical use, and develop AI literacy. She stressed that AI will not replace humans but will replace those who fail to learn how to use it effectively.

Key Points

- AI is now integral to medical education but poses risks through misinformation.

- ChatGPT and similar tools can generate false or outdated medical data.

- Students must verify AI outputs and prioritise ethical use of technology.

- AI literacy, integrity, and continuous learning are essential for future doctors.

- Simulation-based and hybrid training models support responsible tech adoption.

Keywords

URL

Summary generated by ChatGPT 5