Source

The New York Times

Summary

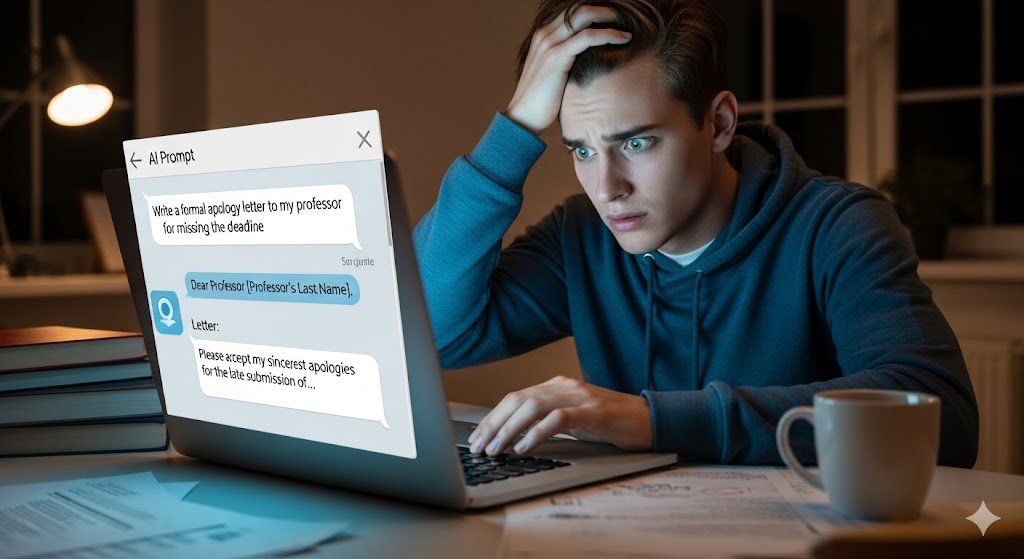

At the University of Illinois Urbana–Champaign, over 100 students in an introductory data science course were caught using artificial intelligence both to cheat on attendance and to generate apology emails after being discovered. Professors Karle Flanagan and Wade Fagen-Ulmschneider identified the misuse through digital tracking tools and later used the incident to discuss academic integrity with their class. The identical AI-written apologies became a viral example of AI misuse in education. While the university confirmed no disciplinary action would be taken, the case underscores the lack of clear institutional policy on AI use and the growing tension between student temptation and ethical academic practice.

Key Points

- Over 100 Illinois students used AI to fake attendance and write identical apologies.

- Professors exposed the incident publicly to promote lessons on academic integrity.

- No formal sanctions were applied because the syllabus lacked explicit AI-use rules.

- The case reflects universities’ struggle to define ethical AI boundaries.

- The incident highlights the normalisation and risks of generative AI in student behaviour.

Keywords

URL

https://www.nytimes.com/2025/10/29/us/university-illinois-students-cheating-ai.html

Summary generated by ChatGPT 5