Source

The Conversation

Summary

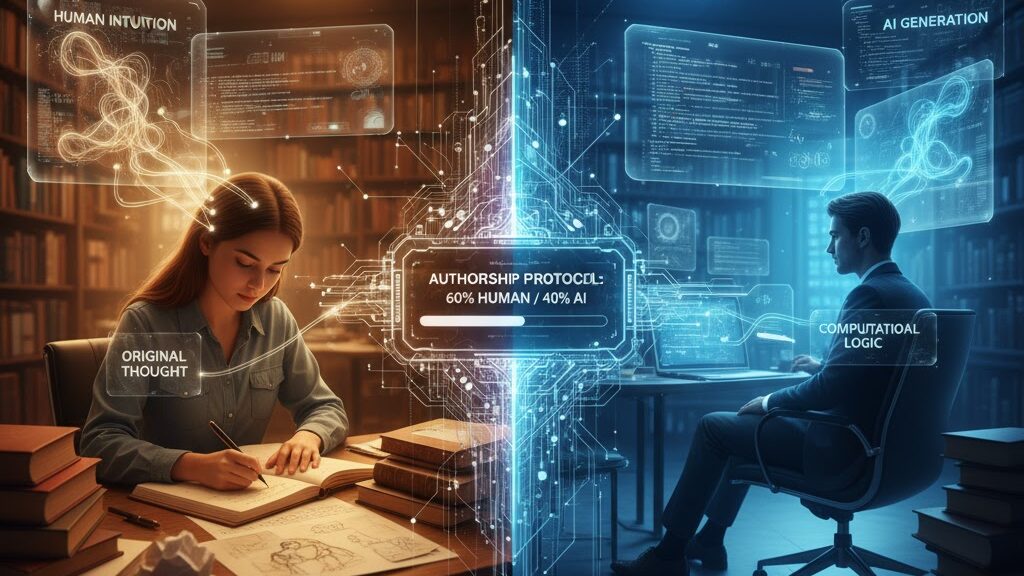

Eli Alshanetsky, a philosophy professor at Temple University, warns that as AI-generated writing grows more polished, the link between human reasoning and authorship risks dissolving. To preserve academic and professional integrity, his team is piloting an “AI authorship protocol” that verifies human engagement during the writing process without resorting to surveillance or detection. The system embeds real-time reflective prompts into the work and produces a secure “authorship tag” confirming that the finished piece complies with the specified AI-use rules. Alshanetsky argues that this approach could serve as a model for accountability and trust across education, publishing, and other professional fields increasingly shaped by AI.

Key Points

- Advanced AI makes it harder to see the human thinking behind writing and decision-making.

- A new authorship protocol links student work to the student's own reasoning.

- The system uses adaptive AI prompts and verification tags to confirm engagement.

- It avoids intrusive monitoring by building AI-use terms into the submission process.

- The model could strengthen trust in professions that depend on human judgment.

Summary generated by ChatGPT 5