Source

Inside Higher Ed

Summary

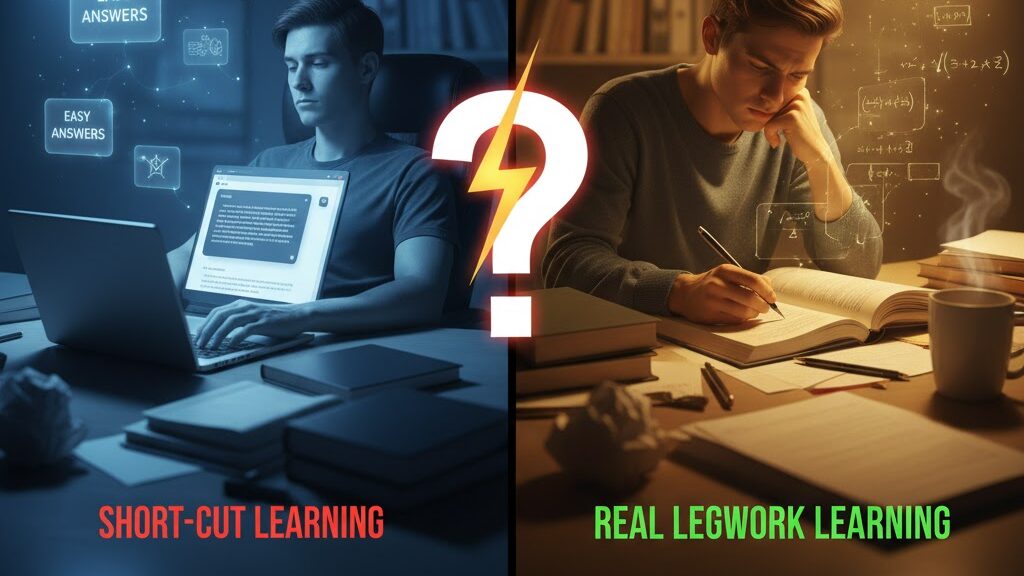

Philosophy instructor Lily Abadal argues that the traditional take-home essay has long been failing as a measure of critical thinking—an issue made undeniable by the rise of generative AI. Instead of abandoning essays altogether, she advocates for “slow-thinking pedagogy”: a semester-long, structured, in-class writing process that replaces rushed, last-minute submissions with deliberate research, annotation, outlining, drafting and revision. Her scaffolded model prioritises depth over content coverage and cultivates intellectual virtues such as patience, humility and resilience. Abadal contends that meaningful writing requires time, struggle and independence—conditions incompatible with AI shortcuts—and calls for designated AI-free spaces where students can practise genuine thinking and writing.

Key Points

- Traditional take-home essays often reward superficial synthesis rather than deep reasoning.

- AI exposes existing weaknesses by enabling polished but shallow student work.

- “Slow-thinking pedagogy” uses structured, in-class writing to rebuild genuine engagement.

- Scaffolded steps (research, annotation, thesis development, outlining, drafting and revision) promote real understanding.

- Protecting AI-free spaces supports intellectual virtues essential for authentic learning.

URL

https://www.insidehighered.com/opinion/career-advice/teaching/2025/11/07/way-save-essay-opinion

Summary generated by ChatGPT 5