Source

TechRadar

Summary

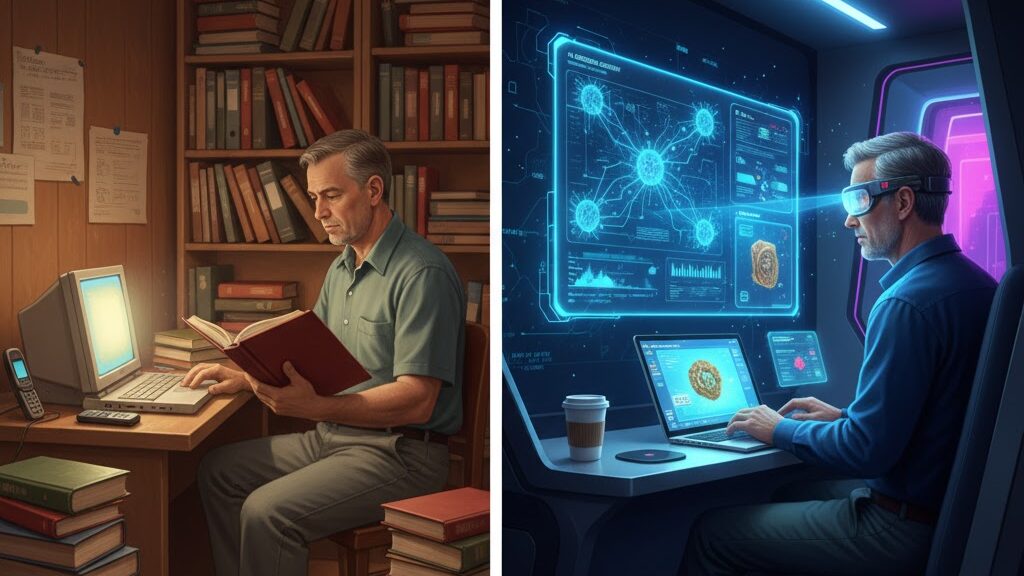

Tech writer Paul Hatton reflects on how AI-driven tools have transformed the student experience since his own university days. Testing Genio Notes, an AI-powered note-taking app, he explores how technology now supports learning through features like real-time transcription, searchable notes, automated lecture summaries and quizzes. The app’s design reflects a shift toward integrated, AI-assisted study methods that enhance engagement and retention. While praising its accuracy and convenience, Hatton notes subscription costs and limited organisational options as drawbacks. His personal experiment captures the contrast between analogue education and today’s AI-augmented learning environment.

Key Points

- Genio Notes uses AI to record, transcribe and organise class content.

- Features like “Outline” and “Quiz Me” automate revision and knowledge checks.

- The app enhances accessibility and efficiency in study routines.

- Hatton highlights the growing normalisation of AI-assisted learning.

- Some limitations remain, including subscription cost and limited flexibility in folder structure.

Summary generated by ChatGPT 5