by Sue Beckingham, NTF PFHEA – Sheffield Hallam University and Peter Hartley NTF – Edge Hill University

Estimated reading time: 8 minutes

The GenAI industry regularly proclaims that the ‘next release’ of the chatbot of your choice will get closer to its ultimate goal – Artificial General Intelligence (AGI) – where AI can complete the widest range of tasks better than the best humans.

Are we providing sufficient help and support to our colleagues and students to understand and confront the implications of this direction of travel?

Or is AGI either an improbable dream or the ultimate threat to humanity?

Along with many (most?) GenAI users, we have seen impressive developments but not yet seen apps demonstrating anything close to AGI. OpenAI released GPT-5 in 2025 and Sam Altman (CEO) enthused: “GPT-5 is the first time that it really feels like talking to an expert in any topic, like a PhD-level expert.” But critical reaction to this new model was very mixed and he had to backtrack, admitting that the launch was “totally screwed up”. Hopefully, this provides a bit of breathing space for Higher Education – an opportunity to review how we encourage staff and students to adopt an appropriately critical and analytic perspective on GenAI – what we would call ‘critical AI literacy’.

Acknowledging the costs of Generative AI

Critical AI literacy involves understanding how to use GenAI responsibly and ethically – knowing when and when not to use it, and the reasons why. One elephant in the room is that GenAI incurs costs, and we need to acknowledge these.

Staff and students should be aware of ongoing debates on GenAI’s environmental impact, especially given increasing pressures to develop GenAI as your ‘always-on/24-7’ personal assistant. Incentives to treat GenAI as a ‘free’ service have increased with OpenAI’s move into education, offering free courses and certification. We also see increasing pressure to integrate GenAI into pre-university education, as illustrated by the recent ‘Back to School’ AI Summit 2025 and accompanying book, which promises a future of ‘creativity unleashed’.

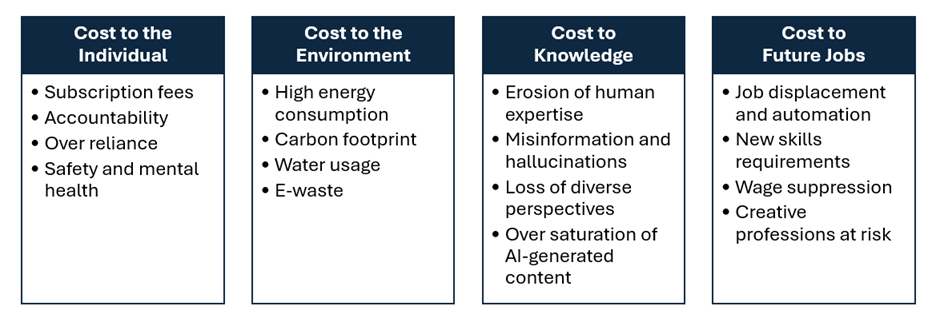

We advocate a multi-factor definition of the ‘costs’ of GenAI so we can debate its capabilities and limitations from the broadest possible perspective. For example, we must evaluate opportunity costs to users. Recent research, including brain scans on individual users, found that over-use of GenAI (or specific patterns of use) can have a definite negative impact on users’ cognitive capacities and performance, including metacognitive laziness and cognitive debt. We group costs into four key areas: cost to the individual, to the environment, to knowledge and to future jobs.

(Beckingham and Hartley, 2025)

Cost to the individual

Fees: subscription fees for GenAI tools range from free for the basic version through to different levels of paid upgrades (Note: subscription tiers are continually changing). Premium models such as enterprise AI assistants are costly, limiting access to businesses or high-income users.

Accountability: Universities must provide clear guidelines on what can and cannot be shared with these tools, along with the concerns and implications of infringing copyright.

Over-reliance: Outcomes for learning depend on how GenAI apps are used. If students rely on AI-generated content too heavily or exclusively, they can make poor decisions, with a detrimental effect on skills.

Safety and mental health: Increased use of personal assistants providing ‘personal advice’ for socioemotional purposes can lead to increased social isolation.

Cost to the environment

Energy consumption – The infrastructure behind Large Language Models (LLMs) requires millions of GPU hours for training alone, and energy consumption increases substantially for image generation. The growth of data centres also creates concerns for energy supply.

Emissions and carbon footprint – Developing the technology creates emissions through the mining, manufacturing, transport and recycling processes.

Water consumption – Water needed for cooling in the data centres equates to millions of gallons per day.

e-Waste – This includes toxic materials (e.g. lead, barium, arsenic and chromium) in components within the ever-increasing number of LLM servers. Obsolete servers generate substantial toxic emissions if not recycled properly.

Cost to knowledge

Erosion of expertise – Models are trained on information that is publicly available on the internet, obtained through formal partnerships with third parties, or provided or generated by users, human trainers and researchers.

Ethics – Ethical concerns highlight the lived experiences of those employed in data annotation and content moderation of text, images and video to remove toxic content.

Misinformation – Indiscriminate data scraping from blogs, social media, and news sites, coupled with text entered by users of LLMs, can result in ‘regurgitation’ of personal data, hallucinations and deepfakes.

Bias – Algorithmic bias and discrimination occur when LLMs inherit social patterns, perpetuating stereotypes relating to gender, race, disability and other protected characteristics.

Cost to future jobs

Job displacement – GenAI is “reshaping industries and tasks across all sectors”, driving business transformation. But will these technologies replace rather than augment human work?

Job matching – Increased use of AI in recruitment and by jobseekers creates a risk that GenAI misrepresents skills. This makes it harder for job-seeker profile analysers to accurately match skills to candidates who can genuinely evidence them.

New skills – Reskilling and upskilling in AI and big data tops the list of fastest-growing workplace skills. A lack of opportunity to do so can lead to increased unemployment and inequality.

Wage suppression – Workers with skills that enable them to use AI may see their productivity and wages increase, whereas those who do not may see their wages decrease.

The way forward

We can only develop AI literacy by actively involving our student users. Previously we have argued that institutions/faculties should establish ‘collaborative sandpits’ offering opportunities for discussion and ‘co-creation’. Staff and students need space for this so that they can contribute to debates on what we really mean by ‘responsible use of GenAI’ and develop procedures to ensure responsible use. This is one area where collaborations/networks like GenAI N3 can make a significant contribution.

Sadly, we see too many commentaries which downplay, neglect or ignore GenAI’s issues and limitations. For example, the latest release from OpenAI – Sora 2 – offers text to video and has raised some important challenges to copyright regulations. There is also the continuing problem of hallucinations. Despite recent claims of improved accuracy, GenAI is still susceptible to them. So how do we identify and guard against untruths which are confidently expressed by the chatbot?

We all need to develop a realistic perspective on GenAI’s likely development. The pace of technical change (and some rather secretive corporate habits) makes this very challenging for individuals, so we need proactive and co-ordinated approaches by course/programme teams. The practical implication of this discussion is that we all need to develop a much broader understanding of GenAI than a simple ‘press this button’ approach.

Reference

Beckingham, S. and Hartley, P. (2025). In search of ‘Responsible’ Generative AI (GenAI). In: Doolan, M.A. and Ritchie, L. (eds.) Transforming teaching excellence: Future proofing education for all. Leading Global Excellence in Pedagogy, Volume 3. UK: IFNTF Publishing. ISBN 978-1-7393772-2-9 (ebook). https://amzn.eu/d/gs6OV8X

Sue Beckingham

Associate Professor Learning and Teaching

Sheffield Hallam University

Sue Beckingham is an Associate Professor in Learning and Teaching at Sheffield Hallam University. Externally she is a Visiting Professor at Arden University and a Visiting Fellow at Edge Hill University. She is also a National Teaching Fellow, Principal Fellow of the Higher Education Academy and Senior Fellow of the Staff and Educational Developers Association. Her research interests include the use of technology to enhance active learning, and she has published and presented this work internationally as an invited keynote speaker. Recent book publications include Using Generative AI Effectively in Higher Education: Sustainable and Ethical Practices for Learning, Teaching and Assessment.

Peter Hartley

Visiting Professor

Edge Hill University

Peter Hartley is now a Higher Education Consultant and Visiting Professor at Edge Hill University, following previous roles as Professor of Education Development at University of Bradford and Professor of Communication at Sheffield Hallam University. A National Teaching Fellow since 2000, he has promoted new technology in education, now focusing on applications/implications of Generative AI, co-editing/contributing to the SEDA/Routledge publication Using Generative AI Effectively in Higher Education (2024; paperback edition 2025). He has also produced several guides and textbooks for students (e.g. co-author of Success in Groupwork, 2nd Edn). Ongoing work includes programme assessment strategies, concept mapping and visual thinking.