by Jonathan Sansom – Director of Digital Strategy, Hills Road Sixth Form College, Cambridge

At Hills Road, we’ve been living in the strange middle ground of generative AI adoption. If you charted its trajectory, it wouldn’t look like a neat curve or even the familiar ‘hype cycle’. It’s more like a tangled ball of wool: multiple forces pulling in competing directions.

The Forces at Play

Our recent work with Copilot agents has made this more obvious. If we attempt a force-field analysis, the drivers for GenAI adoption are strong:

- The need to equip students and staff with future-ready skills.

- Policy and regulatory expectations from the DfE and Ofsted that institutions can demonstrate assurance around AI integration.

- National AI strategies that frame this as an essential area for investment.

- The promise of personalised learning and workload reduction.

- A pervasive cultural hype, blending existential narratives with a relentless ‘AI sales’ culture.

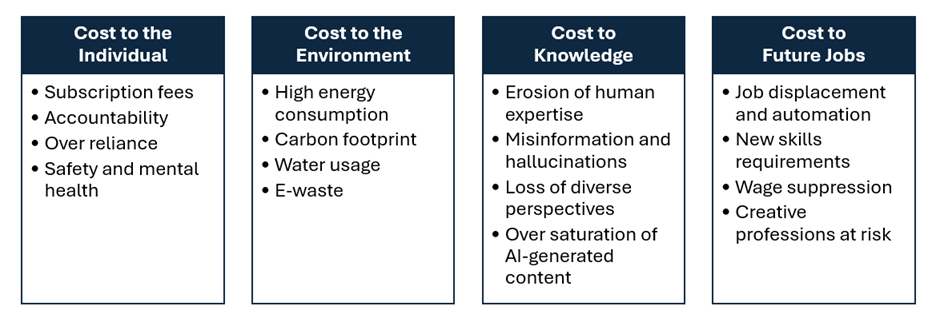

But there are also significant restraints:

- Ongoing academic integrity concerns.

- GDPR and data privacy ambiguity.

- Patchy CPD and teacher digital confidence.

- Digital equity and access challenges.

- The energy cost of AI at scale.

- Polarised educator opinion and staff change fatigue.

The result is persistent dissonance. AI is neither fully embraced nor rejected; instead, we are all negotiating what it might mean in our own settings.

Educator-Led AI Design

One way we’ve tried to respond is through educator-led design. Our philosophy is simple: we shouldn’t just adopt GenAI; we must adapt it to fit our educational context.

That thinking first surfaced in experiments on Poe.com, where we created an Extended Project Qualification (EPQ) Virtual Mentor. It was popular, but it sat outside institutional control: it was neither an enterprise tool nor GDPR-secure.

So in 2025 we moved everything in-house. Using Microsoft Copilot Studio, we created 36 curriculum-specific agents, one for each A Level subject, deployed directly inside Teams. These agents are connected to our SharePoint course resources, so students and staff interact with AI in a trusted, institutionally managed environment.

Built-in Pedagogical Skills

Rather than thinking of these agents as simply ‘question answering machines’, we’ve tried to embed pedagogical skills that mirror what good teaching looks like. Each agent is structured around:

- Explaining through metaphor and analogy – helping students access complex ideas in simple, relatable ways.

- Prompting reflection – asking students to think aloud, reconsider, or connect their ideas.

- Stretching higher-order thinking – moving beyond recall into analysis, synthesis, and evaluation.

- Encouraging subject language use – reinforcing terminology in context.

- Providing scaffolded progression – introducing concepts step by step and deepening complexity only as students respond.

- Supporting responsible AI use – modelling ethical engagement and critical AI literacy.

These skills give the agents an educational texture. For example, if a sociology student asks, “What does patriarchy mean, but in normal terms?”, the agent won’t produce a dense definition. It will begin with a metaphor from everyday life, check understanding through a follow-up question, and then carefully layer in disciplinary concepts. The process is dialogic and recursive, echoing the scaffolding teachers already use in classrooms.

The Case for Copilot

We’re well aware that Microsoft Copilot Studio wasn’t designed as a pedagogical platform. It comes from the world of the Power Platform and Power Automate, not the classroom. In many ways we’re “hijacking” it for our purposes. But it works.

The technical model is efficient: one Copilot Studio authoring licence, no full Copilot licences required, and all interactions handled through Teams chat. Data stays within our tenancy, governed by our Microsoft 365 permissions. It’s simple, secure, and scalable.

And crucially, it has allowed us to position AI as a learning partner, not a replacement for teaching. Our mantra remains: pedagogy first, technology second.

Lessons Learned So Far

From our pilots, a few lessons stand out:

- Moving to an in-tenancy model was essential for trust.

- Pedagogy must remain the driver – we want meaningful learning conversations, not shortcuts to answers.

- Expectations must be realistic. Copilot Studio has clear limitations, especially in STEM subjects, where the quality of dialogue is weaker.

- AI integration is as much about culture, training, and mindset as it is about the underlying technology.

Looking Ahead

As we head into 2025–26, we’re expanding staff training, refining agent ‘skills’, and building metrics to assess impact. We know this is a long-haul project – five years at least – but it feels like the right direction.

The GenAI systems that students and teachers often use in college were designed mainly by engineers, developers, and commercial actors. What’s missing is the educator’s voice. Our work is about inserting that voice: shaping AI not just as a tool for efficiency, but as an ally for reflection, questioning, and deeper thinking.

The challenge is to keep students out of what I’ve called the ‘Cognitive Valley’: the place where understanding is lost because thinking has been short-circuited. Good pedagogical AI can help us avoid that.

We’re not there yet. Some results are excellent, others uneven. But the work is underway, and the potential is undeniable. The task now is to make GenAI fit our context, not the other way around.

Jonathan Sansom

Director of Digital Strategy,

Hills Road Sixth Form College, Cambridge

Passionate about education, digital strategy in education, social and political perspectives on the purpose of learning, cultural change, wellbeing, group dynamics and the mysteries of creativity…

Software / Services Used

| Software / Service | Link |
|---|---|
| Microsoft Copilot Studio | https://www.microsoft.com/en-us/microsoft-365-copilot/microsoft-copilot-studio |

Keywords

AI ethics, AI in education, Cognitive Valley, Custom AI, Digital Strategy, EdTech, Future Skills, Generative AI, Microsoft Copilot, Pedagogy First, Teaching and Learning