From Individual Questions to Collective Practice

Since September, the GenAI:N3 blog has hosted a weekly series of reflections exploring what generative AI means for higher education: for teaching, learning, assessment, academic identity, and institutional responsibility. Early contributions captured a sector grappling with disruption, uncertainty, and unease, asking difficult questions about trust, integrity, creativity, and control at a moment when generative AI arrived faster than policy, pedagogy, or professional development could respond.

As the series unfolded, a clear shift began to emerge. Posts moved from individual reactions and early experimentation towards more structured sense-making, discipline-specific redesign, and, crucially, shared learning. The introduction of communities of practice as a deliberate strategy for AI upskilling marked a turning point in the conversation: from “How do I deal with this?” to “How do we learn, adapt, and shape this together?” Taken as a whole, the series traces that journey from disruption to agency, and from isolated responses to collective practice.

What makes this series distinctive is not simply its focus on generative AI, but the diversity of voices it brings together. Contributors include academic staff, professional staff, educational developers, students, and sector partners, each writing from their own context while engaging with a set of common challenges. The result is not a single narrative, but a constellation of perspectives that reflect the complexity of teaching and learning in an AI-shaped world.

29th September 2025 – Jim O’Mahony

Something Wicked This Way Comes

The GenAI:N3 blog series opens with a deliberately unsettling provocation, asking higher education to confront the unease, disruption, and uncertainty that generative AI has introduced into teaching, assessment, and academic identity. Rather than framing AI as either saviour or villain, this piece invites a more honest reckoning with fear, denial, and institutional inertia. It sets the tone for the series by arguing that ignoring GenAI is no longer an option; what matters now is how educators respond, individually and collectively, to a technology that has already crossed the threshold into everyday academic practice.

6th October 2025 – Dr Yannis

3 Things AI Can Do for You: The No-Nonsense Guide

Building directly on that initial unease, this post grounds the conversation in pragmatism. Stripping away hype and alarmism, it focuses on concrete, immediately useful ways AI can support academic work, from sense-making to productivity. The emphasis is not on replacement but augmentation, encouraging educators to experiment cautiously, critically, and with intent. In the arc of the series, this contribution marks a shift from fear to agency, demonstrating that engagement with AI can be practical, purposeful, and aligned with professional judgement.

13th October 2025 – Sue Beckingham & Peter Hartley

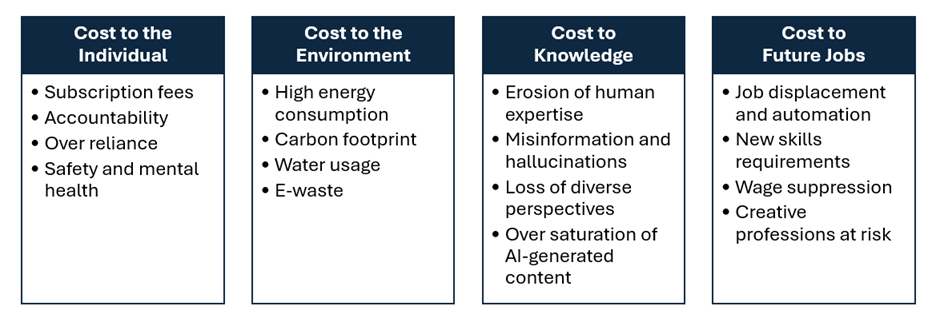

New Elephants in the Generative AI Room? Acknowledging the Costs of GenAI to Develop ‘Critical AI Literacy’

As confidence in experimentation grows, this post re-introduces necessary friction by surfacing the hidden costs of generative AI. Environmental impact, labour implications, equity, and ethical responsibility are brought into sharp focus, challenging overly simplistic narratives of efficiency and innovation. The authors remind readers that responsible adoption requires confronting uncomfortable trade-offs. Within the wider series, this piece deepens the discussion, insisting that values, sustainability, and social responsibility must sit alongside pedagogical opportunity.

20th October 2025 – Jonathan Sansom

Making Sense of GenAI in Education: From Force Analysis to Pedagogical Copilot Agents

Here the conversation turns towards structured sense-making. Drawing on strategic and pedagogical frameworks, this post explores how educators and institutions can move beyond reactive responses to more deliberate design choices. The idea of AI as a “copilot” rather than an autonomous actor reframes the relationship between teacher, learner, and technology. In the narrative of the series, this contribution offers conceptual tools for navigating complexity, helping readers connect experimentation with strategy.

27th October 2025 – Patrick Shields

AI Adoption & Education for SMEs

Widening the lens beyond universities, this post examines AI adoption through the perspective of small and medium-sized enterprises, highlighting the skills, mindsets, and educational approaches needed to support workforce readiness. The crossover between higher education, lifelong learning, and industry becomes explicit. This piece situates GenAI not just as an academic concern, but as a societal one, reinforcing the importance of education systems that are responsive, connected, and outward-looking.

3rd November 2025 – Tadhg Blommerde

Dr Strange-Syllabus or: How My Students Learned to Mistrust AI and Trust Themselves

Returning firmly to the classroom, this reflective account explores what happens when students are encouraged to engage critically with AI rather than rely on it unthinkingly. Through curriculum design and assessment choices, learners begin to question outputs, assert their own judgement, and reclaim intellectual agency. This post marks a pivotal moment in the series, showing how thoughtful pedagogy can transform AI from a threat to academic integrity into a catalyst for deeper learning.

10th November 2025 – Brian Mulligan

AI Could Revolutionise Higher Education in a Way We Did Not Expect

This contribution steps back to consider second-order effects, arguing that the most significant impact of AI may not be efficiency or automation, but a reconfiguration of how learning, expertise, and value are understood. It challenges institutions to think beyond surface-level policy responses and to anticipate longer-term cultural shifts. Positioned mid-series, the post broadens the horizon, encouraging readers to think systemically rather than tactically.

17th November 2025 – Kerith George-Briant & Jack Hogan

This Is Not the End but a Beginning: Responding to “Something Wicked This Way Comes”

Responding directly to the opening post, this piece reframes the initial sense of threat as a starting point rather than a conclusion. The authors emphasise community, dialogue, and shared responsibility, arguing that collective reflection is essential if higher education is to navigate GenAI well. This piece reinforces one of the central through-lines of the series: that no single institution or individual has all the answers, but progress is possible through collaboration.

24th November 2025 – Bernie Goldbach

The Transformative Power of Communities of Practice in AI Upskilling for Educators

This post makes the case that the most sustainable way to build AI capability in education is not through one-off training sessions, but through communities of practice that support ongoing learning, experimentation, and shared problem-solving. It highlights how peer-to-peer dialogue helps educators move from cautious curiosity to confident, critical use of tools, while also creating space to discuss ethics, assessment, and evolving norms without judgement. Positioned within the blog series, it serves as a bridge between individual experimentation and institutional change: a reminder that AI upskilling is fundamentally social, and that collective learning structures are one of the best defences against both hype and paralysis.

1st December 2025 – Hazel Farrell et al.

Teaching the Future: How Tomorrow’s Music Educators Are Reimagining Pedagogy

Offering a discipline-specific lens, this post explores how music education is being rethought in light of AI, creativity, and emerging professional realities. Rather than diluting artistic practice, AI becomes a catalyst for re-examining what it means to teach, learn, and create. Within the series, this contribution demonstrates how GenAI conversations translate into authentic curriculum redesign, grounded in disciplinary values rather than generic solutions.

8th December 2025 – Ken McCarthy

Building the Manifesto: How We Got Here and What Comes Next

This reflective piece pulls together many of the threads running through the series, documenting the collaborative process behind the Manifesto for Generative AI in Higher Education. It positions the Manifesto not as a prescriptive policy document but as a living statement shaped by diverse voices, shared concerns, and collective aspiration. In the narrative arc, it represents a moment of synthesis, turning discussion into a shared point of reference.

15th December 2025 – Leigh Graves Wolf

Rebuilding Thought Networks in the Age of AI

Moving from frameworks to cognition, this post explores how AI is reshaping thinking itself. Rather than outsourcing thought, the author argues for intentionally rebuilding intellectual networks so that AI becomes part of, not a replacement for, human sense-making. This contribution deepens the series philosophically, reminding readers that the stakes of GenAI are as much cognitive and epistemic as they are technical.

22nd December 2025 – Frances O’Donnell

Universities: GenAI – There’s No Stopping, Start Shaping!

The series culminates with a clear call to action. Acknowledging both inevitability and responsibility, this post urges universities to move decisively from reaction to leadership. The emphasis is on shaping futures rather than resisting change, grounded in values, purpose, and public good. As a closing note, it captures the spirit of the entire series: GenAI is already here, but how it reshapes higher education remains a choice.

With Thanks – and an Invitation

This series exists because of the generosity, openness, and intellectual courage of its contributors. Each author took the time to reflect publicly, to question assumptions, to share practice, and to contribute thoughtfully to a conversation that is still very much in motion. Collectively, these posts embody the spirit of GenAI:N3 – collaborative, reflective, and committed to shaping the future of higher education with care rather than fear.

We would like to extend our sincere thanks to all who have contributed to the blog to date, and to those who have engaged with the posts through reading, sharing, and discussion. The conversation does not end here. If you are experimenting with generative AI in your teaching, supporting others to do so, grappling with its implications, or working with students as partners in this space, we warmly invite you to write a blog post of your own. Your perspective matters, and your experience can help others navigate this rapidly evolving landscape.

If you would like to contribute, please get in touch at blog@genain3.ie; we would love to hear from you.

| Date | Title | Author | Link |
|---|---|---|---|
| 29 September 2025 | Something Wicked This Way Comes | Jim O’Mahony | https://genain3.ie/something-wicked-this-way-comes/ |
| 6 October 2025 | 3 Things AI Can Do for You: The No-Nonsense Guide | Dr Yannis | https://genain3.ie/3-things-ai-can-do-for-you-the-no-nonsense-guide/ |
| 13 October 2025 | New Elephants in the Generative AI Room? Acknowledging the Costs of GenAI to Develop ‘Critical AI Literacy’ | Sue Beckingham & Peter Hartley | https://genain3.ie/new-elephants-in-the-generative-ai-room-acknowledging-the-costs-of-genai-to-develop-critical-ai-literacy/ |
| 20 October 2025 | Making Sense of GenAI in Education: From Force Analysis to Pedagogical Copilot Agents | Jonathan Sansom | https://genain3.ie/making-sense-of-genai-in-education-from-force-analysis-to-pedagogical-copilot-agents/ |
| 27 October 2025 | AI Adoption & Education for SMEs | Patrick Shields | https://genain3.ie/ai-adoption-education-for-smes/ |
| 3 November 2025 | Dr Strange-Syllabus or: How My Students Learned to Mistrust AI and Trust Themselves | Tadhg Blommerde | https://genain3.ie/dr-strange-syllabus-or-how-my-students-learned-to-mistrust-ai-and-trust-themselves/ |
| 10 November 2025 | AI Could Revolutionise Higher Education in a Way We Did Not Expect | Brian Mulligan | https://genain3.ie/ai-could-revolutionise-higher-education-in-a-way-we-did-not-expect/ |
| 17 November 2025 | This Is Not the End but a Beginning: Responding to “Something Wicked This Way Comes” | Kerith George-Briant & Jack Hogan | https://genain3.ie/this-is-not-the-end-but-a-beginning-responding-to-something-wicked-this-way-comes/ |
| 24 November 2025 | The Transformative Power of Communities of Practice in AI Upskilling for Educators | Bernie Goldbach | https://genain3.ie/the-transformative-power-of-communities-of-practice-in-ai-upskilling-for-educators/ |
| 1 December 2025 | Teaching the Future: How Tomorrow’s Music Educators Are Reimagining Pedagogy | Hazel Farrell et al. | https://genain3.ie/teaching-the-future-how-tomorrows-music-educators-are-reimagining-pedagogy/ |
| 8 December 2025 | Building the Manifesto: How We Got Here and What Comes Next | Ken McCarthy | https://genain3.ie/building-the-manifesto-how-we-got-here-and-what-comes-next/ |
| 15 December 2025 | Rebuilding Thought Networks in the Age of AI | Leigh Graves Wolf | https://genain3.ie/rebuilding-thought-networks-in-the-age-of-ai/ |
| 22 December 2025 | Universities: GenAI – There’s No Stopping, Start Shaping! | Frances O’Donnell | https://genain3.ie/universities-genai-theres-no-stopping-start-shaping/ |