Source

The Irish Times

Summary

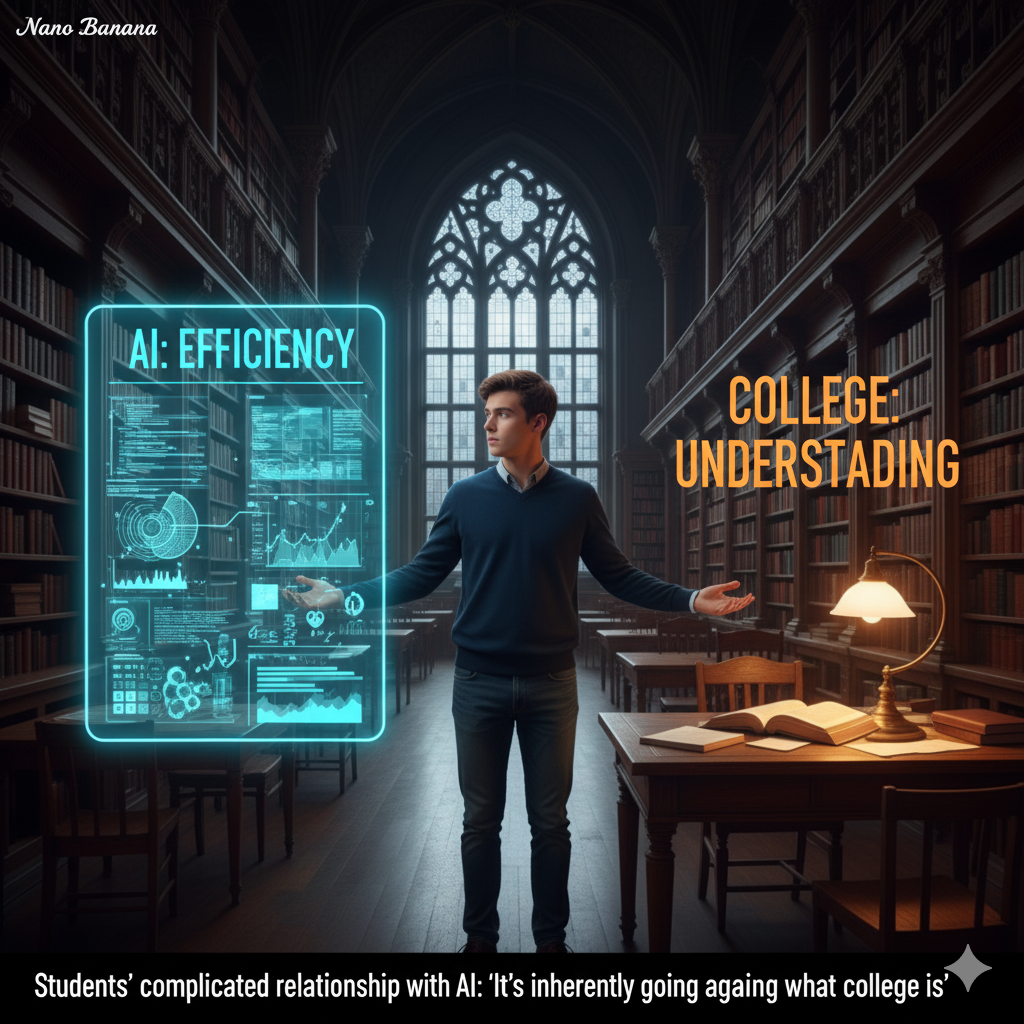

Many students feel a tension between using generative AI (GenAI) tools like ChatGPT and the traditional values of university education. Some avoid AI because they feel it undermines academic integrity or devalues the effort they have invested. Others see benefits in using it to organise study, generate ideas, or offload mundane parts of coursework. Concerns include fairness (better grades for less effort), the accuracy of chatbot-generated content, and environmental impact. Students also worry about losing critical-thinking skills and about how assignments will change as AI becomes more common. Many call for clearer institutional guidelines, greater awareness of existing policies, and equitable access to and use of these tools.

Key Points

- Using GenAI can feel like “offloading work,” which conflicts with the ideal of independent learning that many students believe defines college life.

- Students worry about fairness: those who use AI may gain advantage over those who do not.

- Accuracy is a concern: ChatGPT sometimes provides false information, and students are aware of this risk.

- Some students avoid AI altogether to escape suspicion of cheating, and some fear being accused even when they have not used it.

- Others find helpful uses: organising references, creating study timetables, acting as a “second pair of eyes” or “study companion.”


Summary generated by ChatGPT 5