Source

The Register

Summary

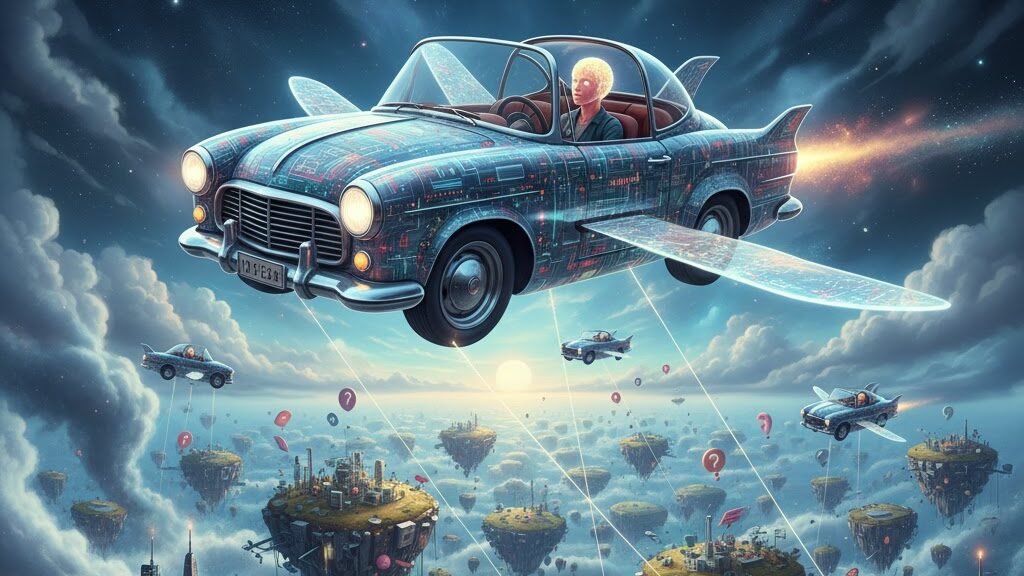

Mark Pesce likens artificial intelligence to the “flying car of the mind”: an alluring technology that few people know how to operate safely. Drawing parallels with early computing, he argues that despite AI’s apparent intuitiveness, using it effectively requires a deep understanding of workflow, data, and design. Pesce criticises tech companies for handing powerful AI tools to untrained users, which fuels unrealistic expectations and makes failure all but inevitable. Without proper guidance and structured learning, most AI projects, like unpiloted flying cars, end in “flaming wrecks.” He concludes that meaningful productivity gains come only when users invest the effort to learn how to “fly” AI systems responsibly.

Key Points

- As with the personal computer before it, AI demands training before it delivers productivity gains.

- The “flying car” metaphor captures AI’s mix of allure, danger, and complexity.

- Vendors overstate AI’s accessibility while underestimating the need for user expertise.

- Most AI projects fail because of poor planning, lack of data management, or user naïveté.

- Pesce calls for humility, discipline, and education in how AI tools are adopted and applied.

URL

https://www.theregister.com/2025/10/15/ai_vs_flying_cars/

Summary generated by ChatGPT 5