Source

Ars Technica

Summary

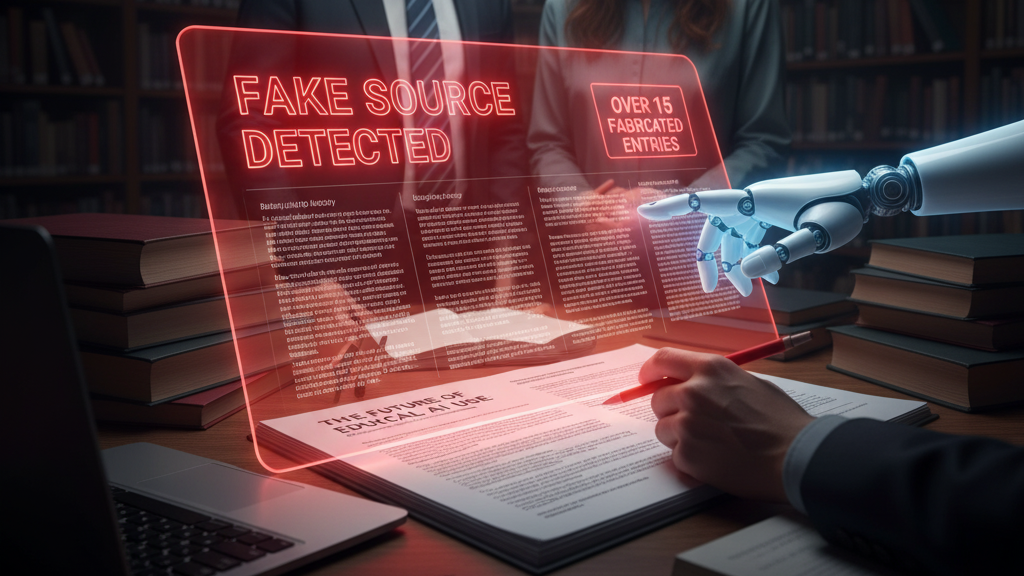

An influential Canadian government report advocating ethical AI use in education was found, on closer scrutiny, to contain more than 15 fake or misattributed sources. Experts who examined the document found that many citations pointed to dead links, non-existent works, or outlets with no record of having published the cited piece. The findings raise serious concerns about how “evidence” is assembled in policy advisories and may undermine the credibility of calls for AI ethics in education. The incident serves as a caution: even reports calling for rigour must themselves be rigorous.

Key Points

- The Canadian report included more than 15 citations that appear to be fabricated or misattributed.

- Some sources could not be found in public databases, and some journal names were incorrect or non-existent.

- The errors weaken the report’s authority and expose it to charges of hypocrisy, given its own call for the ethical use of AI.

- Experts argue that policy documents must adhere to the same standards they demand of educational AI tools.

- This case underscores how vulnerable institutional narratives are to “junk citations” and sloppy vetting.

Summary generated by ChatGPT 5