Source

Forbes

Summary

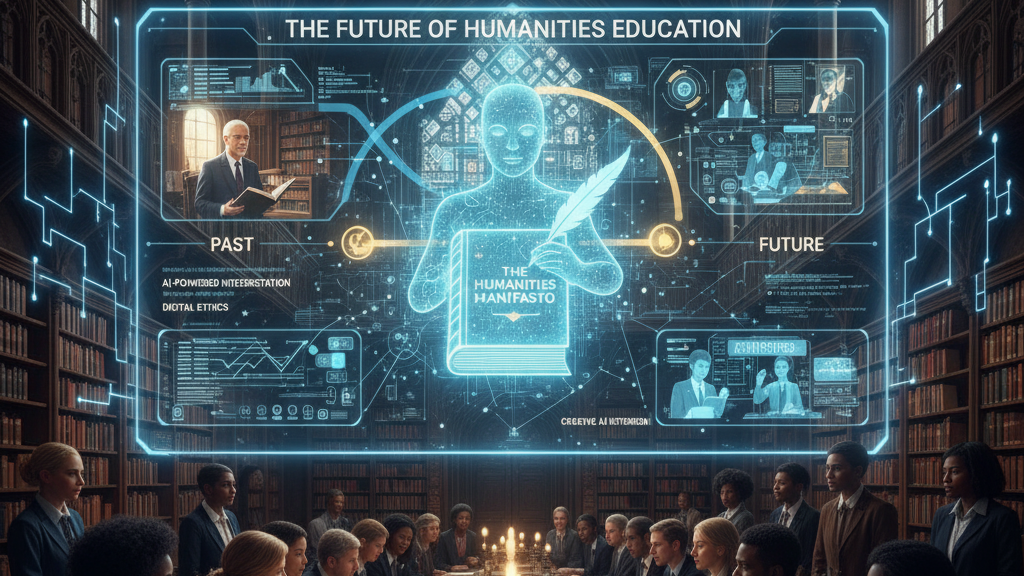

Mary Hemphill argues that while AI is rapidly changing technical and STEM fields, its impact on the humanities may be even more profound. She sees AI not just as a tool but as a collaborator, one that can help students explore new interpretations, generate creative prompts, and push boundaries in writing, philosophy, or cultural critique. But this is double-edged: overreliance risks hollowing out the labour of deep thinking and undermining the craft that faculty value. Hemphill suggests that humanities courses must adapt through “AI-native” pedagogy: teaching prompt literacy, interrogative reading, and critical layering. The goal is to use AI to elevate human thinking, not replace it.

Key Points

- Humanities study may shift from sourcing facts toward deeper interpretation, supported by AI-assisted exploration.

- Students should be taught prompt literacy: how to interrogate AI outputs rather than simply accept them.

- “AI-native” pedagogy: adapting assignments to expect AI use, layered with critical human engagement.

- Overreliance on AI can weaken students’ capacity for independent thinking and textual craftsmanship.

- Humanities faculty must lead the design of AI integration so that it preserves the values of the discipline.

Summary generated by ChatGPT 5