Source

Yale Daily News

Summary

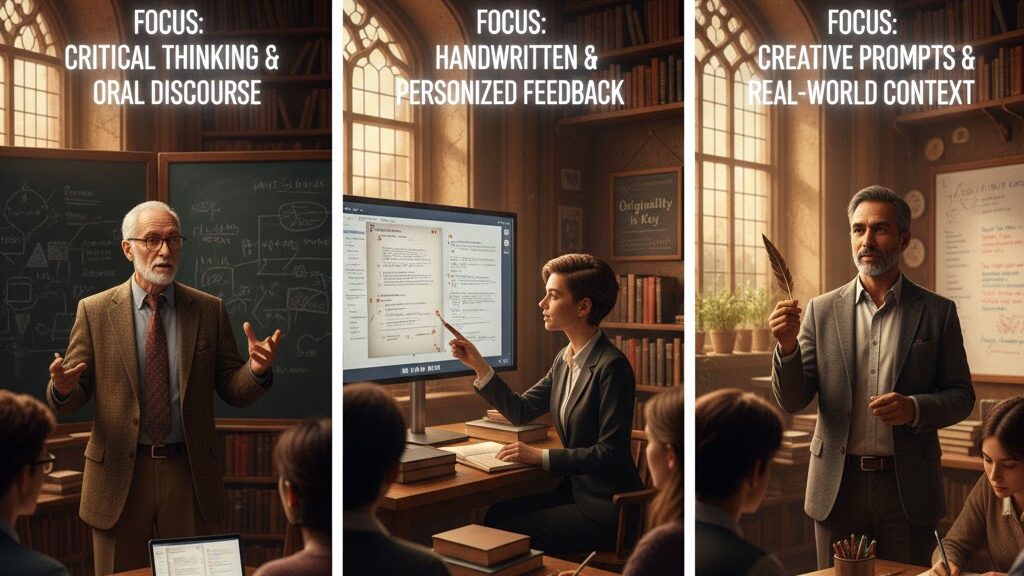

Without a unified departmental policy, Yale University’s English professors are independently addressing the challenge of generative AI in student writing. While all interviewed faculty agree that AI undermines critical thinking and originality, their responses vary from outright bans to guided experimentation. Professors Stefanie Markovits and David Bromwich warn that AI shortcuts obstruct the process of learning to think and write independently, while Rasheed Tazudeen enforces a no-tech classroom to preserve student engagement. Playwriting professor Deborah Margolin insists that AI cannot replicate authentic human voice and creativity. Across approaches, faculty emphasise trust, creativity, and the irreplaceable role of struggle in developing genuine thought.

Key Points

- Yale’s English Department has no central AI policy, favouring academic freedom.

- Faculty agree AI use hinders original thinking and creative voice.

- Some, like Tazudeen, impose no-tech classrooms to deter reliance on AI.

- Others allow limited exploration, paired with clear guidelines and reflection.

- Consensus: authentic learning requires human engagement and intellectual struggle.

Summary generated by ChatGPT 5