Source

The Conversation

Summary

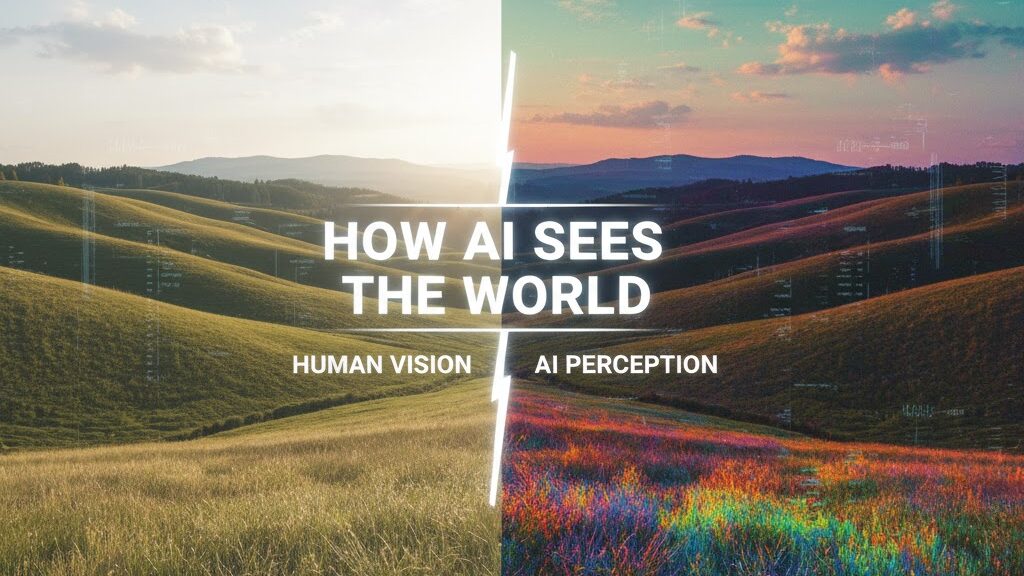

T. J. Thomson explores how artificial intelligence perceives the visual world and how that perception diverges sharply from human vision. His study, published in Visual Communication, compares AI-generated images with human-created illustrations and photographs to reveal how algorithms process and reproduce visual information. Unlike humans, who interpret colour, depth, and cultural context, AI relies on mathematical patterns, metadata, and comparisons across large image datasets. As a result, AI-generated visuals tend to be boxy, oversaturated, and generic, reflecting biases from stock photography and limited diversity in training data. Thomson argues that understanding these differences can help creators choose when to rely on AI for efficiency and when human vision is needed for authenticity and emotional impact.

Key Points

- AI perceives visuals through data patterns and metadata, not sensory interpretation.

- AI-generated images tend to ignore cultural and contextual cues and default to photorealism.

- Colours and shapes in AI images are often exaggerated or artificial due to training biases.

- Human-made images evoke authenticity and emotional engagement that AI versions lack.

- Knowing when to use AI or human vision is key to effective visual communication.

Keywords

URL

Summary generated by ChatGPT 5